-

A GitHub Copilot AI Anecdote

I’ve been watching Three Body—the 2023 Chinese television adaptation of the same Liu Cixin science fiction novel that Netflix also put out a version of. My copy was found on the high seas shortly after the release of the series, but it’s now available on a few streaming services. I was about 20 minutes into watching Episode 13 when the subtitles suddenly lost their sync with the video; text appeared on screen about 15-20 seconds after it was spoken. I tried Plex’s feature for downloading alternate subtitles, but each one exhibited the same problem. I spent $2 to just buy that episode from Amazon, skipped ahead to where I had left off, and even that had the same misaligned subtitles. This post is the story of how, having failed to buy my way out of the problem, I used AI to fix it.

I have a subscription to GitHub Copilot through my work, so after a rough assessment of the problem I opened the offending srt caption file in Visual Studio Code and submitted this prompt in the chat window:

The srt file I have open is incorrect; all captions are set 15 seconds too late beginning with caption number 225. Please correct the srt file, altering the time codes for all caption entries beginning with 225, to have them appear on screen 15 seconds earlier.

What I wanted was for the robot to just echo out a repaired srt file, but instead it offered a Python script that could fix the problem. Apologies for the spoiler, but anyone skimming this post for a solution to their own caption file problems might want to skip a bit farther down:

To adjust the time codes in an SRT file, you can read the file line by line, identify the lines that contain time codes, and adjust the time codes as needed. Here’s a Python script that does that:

import re from datetime import timedelta # Open the SRT file with open('Three-Body.S01E13.CHINESE.WEBRip.x264-ION10[eztv.re].srt', 'r') as file: lines = file.readlines() # Regular expression to match time codes time_code_regex = re.compile(r'(\d{2}:\d{2}:\d{2},\d{3}) --> (\d{2}:\d{2}:\d{2},\d{3})') # Time delta to subtract time_delta = timedelta(seconds=15) # Adjust the time codes for i in range(len(lines)): # Check if the line is a time code line match = time_code_regex.match(lines[i]) if match: # Check if the caption number is 225 or higher caption_number = int(lines[i-1].strip()) if caption_number >= 225: # Parse the start and end times start_time = datetime.strptime(match.group(1), '%H:%M:%S,%f') end_time = datetime.strptime(match.group(2), '%H:%M:%S,%f') # Subtract the time delta start_time -= time_delta end_time -= time_delta # Format the new time code new_time_code = f'{start_time.strftime("%H:%M:%S,%f")[:-3]} --> {end_time.strftime("%H:%M:%S,%f")[:-3]}' # Replace the line with the new time code lines[i] = new_time_code # Write the adjusted lines back to the file with open('Three-Body.S01E13.CHINESE.WEBRip.x264-ION10[eztv.re].srt', 'w') as file: file.writelines(lines)This script opens the SRT file, reads all the lines into a list, and then iterates over the list. For each line, it checks if the line is a time code line and if the caption number is 225 or higher. If both conditions are met, it parses the start and end times, subtracts 15 seconds from each, and replaces the line with the new time code. Finally, it writes the adjusted lines back to the file.

Please replace

'Three-Body.S01E13.CHINESE.WEBRip.x264-ION10[eztv.re].srt'with the path to your SRT file if it’s different.The script looked decent enough at a glance, although it didn’t include any details about how to run the script. I knew to put it in a

.pyfile, and although I have very little experience with Python I could guess that the script might need to be run withpython3rather thanpythonon my Mac laptop (which turned out to be true, but perhaps due to some quirk of my own setup). The script also edits the file in-place, rather than saving the result out to a new file, which I would consider bad practice; arguably it should take the srt filename as an input parameter, but then again we’re just trying to solve a single, weird, one-off problem. So we’ll deduct a couple of “ease of use” and “best practice” points, but more importantly the script didn’t work!First, it failed to import the “datetime” module—the syntax highlighting in VS Code made that obvious enough, but the IDE’s proposed fix of

import datetimewasn’t correct, either. So I addedfrom datetime import datetimeto the top of the file and ran the script against my local copy of the srt. I didn’t bother to look very closely at the result—in my defense, it was 10:30pm and I was in “watching tv” mode, not “I am a software engineer” mode—and copied it to the Plex server. I restarted playing the episode where I left off and… now there weren’t any subtitles at all!Let’s look at a snippet of the edited srt file to see if we can spot the problem:

223 00:16:25,660 --> 00:16:30,940 Correcting them is what I should do. 224 00:16:31,300 --> 00:16:45,660 At that time, I thought my life was over, and I might even die in that room. 225 00:16:51,020 --> 00:16:54,500This is the person you want. I've handled all the formalities. 226 00:16:54,500 --> 00:16:56,420You know the nature of this, right?Subtitle formats are actually pretty easy to read in plaintext, which I appreciate. And a glance at the above snippet shows that GitHub Copilot’s script resulted in the timestamp of each entry running immediately into the first line of text of that caption. I’m trying to keep this relatively brief, so I’ll just note that a cursory search turned up a well-known quirk of Python’s

file.readlinesmethod (which reads a file while splitting it into individual lines of text), which is that it includes a “newline” character at the end of each line—and so the correspondingfile.writelinesmethod (which writes a list of lines out to a file) assumes that each line will end with that “now go to the next line” character if necessary. As someone who doesn’t often use Python, that’s an unexpected behavior, so to me this feels like a relatable and very human mistake to make. But anyone used to doing text file operations in Python might find it a strangely elementary thing to miss.After adding the

datetimeimport, fixing the missing linebreak, changing the script to save to a separate file with an “_edited” suffix, and adjusting the amount of time to shift the captions after some trial and error (not Copilot’s fault), we end up with this as the functioning script:import re from datetime import timedelta from datetime import datetime # Open the SRT file with open('Three-Body.S01E13.CHINESE.WEBRip.x264-ION10[eztv.re].srt', 'r') as file: lines = file.readlines() # Regular expression to match time codes time_code_regex = re.compile(r'(\d{2}:\d{2}:\d{2},\d{3}) --> (\d{2}:\d{2}:\d{2},\d{3})') # Time delta to subtract time_delta = timedelta(seconds=18) # Adjust the time codes for i in range(len(lines)): # Check if the line is a time code line match = time_code_regex.match(lines[i]) if match: # Check if the caption number is 225 or higher caption_number = int(lines[i-1].strip()) if caption_number >= 225: # Parse the start and end times start_time = datetime.strptime(match.group(1), '%H:%M:%S,%f') end_time = datetime.strptime(match.group(2), '%H:%M:%S,%f') # Subtract the time delta start_time -= time_delta end_time -= time_delta # Format the new time code new_time_code = f'{start_time.strftime("%H:%M:%S,%f")[:-3]} --> {end_time.strftime("%H:%M:%S,%f")[:-3]}\n' # Replace the line with the new time code lines[i] = new_time_code # Write the adjusted lines back to the file with open('Three-Body.S01E13.CHINESE.WEBRip.x264-ION10[eztv.re]_edited.srt', 'w') as file: file.writelines(lines)I saved that as

fix_3body.pyin the same directory as the srt file, and ran it from a terminal (also in that directory) withpython3 fix_3body.py. And that did work—my spouse and I got to finish watching the episode. Hooray! I’m not going to share the edited srt file, but if you’re stuck in the same situation with episode 13, this should get you most of the way to your own copy of a correct-ish subtitle file (I don’t think the offset is exactly 18 seconds, but close enough).I’ll close with a few scattered thoughts:

- I wonder what caused the discrepency? My best guess is that the show was edited, the subtitles were created, and then approximately 18 seconds of dialog-free footage was removed from the shots of Ye Wenjie being moved from her cell and the establishing shot of the helicopter flying over the snowy forest.

- Overall this felt like a success. As I said, I was not really geared up for programming at the time, and while I could have written my own script to fix this subtitle file, it would have taken a while to get started: what language should I use? How exactly does the SRT format work? How can I subtract 18 seconds from a timecode with fewer than “18” in the seconds position (like

00:16:07,020)? Should I have it accept the file as an input parameter? Maybe have it accept which caption to start with as an input parameter? Should that use the ordinal caption number or a timestamp as the position to start from? Even if I wouldn’t have made the same choices as Copilot for those questions, it got me to something nearly functional without my having to fully wake up my brain. - Of course, I did have to make at least two changes to make this script functional. Python is very widely used, especially in ML/NLP/AI (lots of text-mangling!) circles, and GitHub Copilot seems to be considered the “smartest” generally-available LLM fine-tuned for a specific purpose like this. Savita Subramanian, Bank of America’s head of US equity strategy, recently asserted on Bloomberg’s Odd Lots podcast (yt) that, “we’ve seen … the need for Python Programming completely evaporate because you can get … AI to write your code for you”. And not to pick on her in particular, but in my experience with AI thus far that’s false, especially the “completely evaporate” part. I’m a computer programmer, so you can discount that conclusion as me understanding in my own interest if you’d like.

- I’m not sure there’s a broader lesson to be taken from this, but it struck me that a broken subtitle file feels like the kind of thing that one should expect when sailing the high seas for content. I was surprised when I saw that even Amazon’s copy of the episode had broken subtitles. In the end, controlling a local copy of the episode and its subtitles allowed me to fix the problem for myself. And in fact this kind of strange problem has been surprisingly common on streaming services. My family ran into missing songs on the We Bare Bears cartoon, and Disney Plus launched with “joke-destroying” aspect ratio changes to The Simpsons, just off the top of my head. I don’t believe I can submit my repaired subtitle file to Amazon, but it seems like there’s some kind of process in place to submit corrections to a resource like OpenSubtitles.org.

-

One of the Most University of Chicago Things I Have Ever Read

Well, most humanities at The University of Chicago. This New Yorker profile of Agnes Callard from 2023 is really about one person and her family, but also has so much of what I remember:

- People determined to be honest, deliberate, and thoughtful about their lives.

- Children of faculty who are in a very unusual situation, but seem happy.

- “Night Owls” didn’t exist during my time there, but a late-night conversation series hosted by a philosophy professor sounds like something that I, and many others, would have loved.

- Minimal acknowledgement that one’s intense self-examination and experience may not be generalizable.

- Or maybe I mean something that feels like a strange combination of curiosity and insularity?

- Mental health diagnosis.

- The strong and often-indulged impulse to philosophical discussion.

- Horny professors.

- The kind of restless, striving determination that creates CEOs and political leaders, but applied to something conceptual and niche.

- An figure who is both a dedicated and recognized theoretician in their academic field, as well as the subject of (what sounds like) a kind of gossipy celebrity culture on campus.

It was not a surprise to learn that Dr Callard was also an undergraduate in Hyde Park (before my time). She sounds like the kind of frustrating and exhausting person to be around that I’ll admit I sometimes miss having in my life.

-

When do we teach kids to edit?

My 10 year-old recently had to write an essay for an application for a national scholarship program. He did great, writing 450ish words that were readable, thoughtful, and fun, with pleasantly varying sentences. And then we started editing.

I remember feeling like giving up in my early editing experiences, out of sorrow for the perfect first-draft gems being cut and out of bitterness at (generally) my Mom for having the temerity to suggest changes; if my essay wasn’t good enough then she was welcome to write a different one. My kiddo handled it better than I remember dealing with early editing experiences, by thinking through changes with my spouse without losing confidence in his work. But seeing his reactions and remembering my own made me think about how hard it is to get used to editing your writing.

For a kid, and especially one who tends to have an easy time with homework, it’s annoying to have an assignment that feels complete magically turn back into an interminable work in progress as the editing drags on. But that’s the least of editing’s problems! If the first draft was good, then why edit it all—isn’t the fact of the edits an indication that the original was poor work? How can a piece of writing still be mine if I’m incorporating suggestions from other people? Editing for structure and meaning is especially awful, like slowly waking up and realizing that the clarity you were so certain you’d found was just a dream after all.

I’ve seen my kids edit their work in other contexts: reworking lego creations, touching up drawings, or collaboratively improving Minecraft worlds. So maybe there’s something specific to editing writing that’s more challenging—communication is very personal after all. Or perhaps the homework context is the problem—it is a low-intrinsic-motivation setting. Maybe I just think editing is hard because my kid and I were both pretty good at writing, and some combination of genetics and parenting led to us both having a hard time with even the most gently constructive of criticism. There’s certainly some perfectionism being passed down through the generations in one way or another.

My other child is five years old and is, if anything, even more fiercely protective of her creative work. I want to make this better for her, to get her used to working with other people to evaluate and improve something she has made. But how do you do that as a parent? Should I just start pointing out flaws in my kindergartener’s craft projects? (No, I obviously should not.)

Maybe what’s really changing as kids hit their pre teen years is that creative work must increasingly achieve goals that can be evaluated but not measured. Editing starts out as fixing spelling or targeting a word count, but those are comparatively simple tasks. Does your essay convey what you wanted it to? Is it enjoyable to read? Does your drawing represent your subject or a given style as you intended? I would guess that such aims of editing tend to be implicit for highly-educated parents with strong written communication skills, and not at all obvious to an elementary school student. I’m not sure how much groundwork can be laid for these questions in kindergarten, but the next time I’m editing something with one of my kids, I think I’ll try explicitly introducing those goals before getting out the red pen.

-

Was the Cambrian JavaScript Explosion a Zero Interest Rate Phenomenon?

A kind of cliché-but-true complaint common among programmers in the late 2010’s was that there were too many new JavaScript things every week. Frameworks, libraries, and bits and pieces of tooling relentlessly arrived, exploded in popularity, and then receded like waves on a beach. My sense is that this pace has slowed over the last few years, so I wonder: was this, too, a ZIRP phonemon, and thus a victim of rising interest rates along with other bubbly curiosities like NFTs, direct-to-consumer startups, food delivery discounts, and SPACs?

I’ll admit: I can’t say I’m basing this on anything but anecdotes and vibes. But the vibes were strong, and they were bubbly. People complained about the proliferation of JS widgets not because of the raw number of projects, but because there was a sense that you had to learn and adopt the new hotness (all of which still seem to be going concerns!) in order to be a productive, modern, hirable developer. I think such FOMO is a good indicator of a bubble.

And I think other ZIRP-y factors helped sustain that sense that everyone else was participating in the permanent JavaScript revolution. What could interest rates possibly have to do with JavaScript? Let’s say you have $100 to invest in either a startup that will return a modest 2x your investment in 10 years, or a bank account. If interest rates are 7%, your bank account will also have (very nearly) doubled, and of course it’s much less risky than a startup! If interest rates are 2%, your $100 bank account will only end up about $22 richer after a decade, so you might as well take a chance on Magic Leap. It also means that a big company sitting on piles of cash might as well borrow more, even if they don’t particularly know what to do with it. So all of that is to say: low interest rates meant there was cheap money for startups and tech giants alike.

Startups, for their part, are exactly the kind of fresh start where it really does make sense to try something new and exciting for your

interactive demoMVP. If you’re a technical founder with enough of a not-invented-here bias—a bias I’ll admit to being guilty of—then you might well survey the JavaScript landscape and decide that what it lacks is your particular take on solving some problem or other. Here’s that XKCD link you knew was coming.As for the acronymous tech giants, there’s been recent public accusations that larger firms spent much of the 2010s over-hiring to keep talent both stockpiled for future needs and also safely away from competitors (searches aren’t finding me much beyond VC crank complaints, but I’d also gotten this sense pre-pandemic). Everyone loves a good “paid to do nothing” story, but I think most programmers do want to actually contribute at work. A well-intentioned, ambitious, and opinionated programmer might well pick up some slack by refactoring or reimplementing some existing code into a brand new library to share with world. If nothing else, a popular library is a great artifact to present at your performance review.

I started thinking about this in part because it frustrates me how much programming “content” (for lack of a better word) seems to be geared towards either soldiers in corporate programmer armies or individuals who are only just starting their company or their career: processes so hundreds can collaborate, how-to’s fit only for a blank page, or advice for your biannual job change. There’s probably good demographic reasons for this; large firms with many programmers have many programmers reading Hacker News. But something still feels off about this balance of perspectives, and you know what? Maybe it’s all been a bubble, my opinions are correct, and it’s the children who are wrong.

As interest rates proved to be less transient and some software-adjacent trends were thus shown to have been bubbles, a popular complaint was to compare something like crypto currency to the US railroad boom (and bust) of the 19th century—the punchline being that at least the defunct railroads left behind a quarter-million miles of perfectly usable tracks.

So what did we get for the 2010’s JavaScript bubble? Hardware (especially phones), browsers, ECMAScript, HTML, and CSS all gained a tremendous amount of performance, functionality, and complexity over the decade, and for the most part a programmer can now take advantage of all that comparatively easily. The Hobbesian competition of libraries left us with the strongest, and it’s now presumably less complicated trying to decide what to use to format dates.

Then again, abandonware is now blindly pulled into critical software via onion-layered dependency chains, and big companies have taken frontend development from being something approachable by an eager hobbyist armed with a text editor, and remade it in their own image as something to be practiced by continuously-churning replaceable-parts engineers aided by a rigid exoskeleton of frameworks and compilers and processes.

So yes, “in conclusion, web development is a land of contrasts“. To end on a more interesting note, there are two projects that I think could hint at what the next decade will bring. Microsoft’s Blazor is directly marketed as a way to avoid JavaScript altogether by writing frontend code in C# and just compiling it to WebAssembly (yes, that’s not always true because as usual Microsoft gave the same name to four different things). Meanwhile htmx tries to avoid this whole JavaScript mess by adding some special attributes to HTML to enable just enough app-ness on web pages. I would never bet against JavaScript, but it’s noteworthy that both are clearly geared towards people frustrated with contemporary JS development, and it’ll be interesting to see how much of a following they or similar attempts find over the next few years.

-

Prevent Unoconv From Exporting Hidden Sheets From Excel Documents

At work we use unoconv—a python script that serves as a nice frontend to the UNO document APIs in LibreOffice/OpenOffice—as part of our pipeline for creating preview PDFs and rasterized thumbnail images for a variety of document formats. Recently we had an issue where our thumbnail for a Excel spreadsheet document inexplicably didn’t match the original document. It turned out that the thumbnail was being created for the first sheet, which didn’t display in Excel because it was marked hidden. I couldn’t find any toggle or filter to prevent unoconv from “printing” hidden sheets to PDF, and ended up having to just add a few lines to unconv itself:

# Remove hidden sheets from spreadsheets: phase = "remove hidden sheets" try: for sheet in document.Sheets: if sheet.IsVisible==False: document.Sheets.removeByName(sheet.Name) except AttributeError: passI added that just before the “# Export phase” comment, around line 1110 of the current version of unoconv (0.8.2) as I write this.

I don’t know why this wasn’t the default behavior in LibreOffice, since non-printing export targets like HTML correctly skip the hidden sheet—shouldn’t your printout match what you see on screen?

I’m sharing this as a blog post (rather than submitting a pull request) because unoconv is now “deprecated” according to its GitHub page, which recommends using a rewrite called unoserver in its place. That sounds nice, but for now our unoconv setup is working nicely, especially now that we aren’t creating thumbnails of hidden sheets!

-

Playing Marathon (via Aleph One) With Controllers on RetroPie

Bungie released Marathon in 1994, the same year Doom II came out. Marathon was also a first-person “run around picking up guns to kill aliens with” game, but had a comparatively robust plot with multiple conflicting AI characters, multiple settings, a relatively fleshed-out picture of the bad guys, effectively moody atmospheric music, and some additional universe flavor text here and there.

I never really played much of Doom II, so I’m not sure if Marathon’s gameplay was also a step above. But it was beloved by Macintosh users because it was a rich, advanced FPS with fantastic network play levels, which was exclusive to a platform that was usually left behind by game publishers. Apple wasn’t at the top of their game in that beige Performa, pre- Steve Jobs return era, but Marathon was a celebrated bright spot.

Not long before Microsoft bought them in 2000, Bungie GPL’d the Marathon 2 game engine, which is what the current Aleph One project originated from. Bungie later released the whole trilogy of Marathon games for free, so the scenario/content files can now be distributed with the engine (though I don’t know the license details). They’re still 25-30 year-old games, so (for example) there aren’t many save points, but the open source project has been very successful at making them pleasant to play decades later.

Since the pandemic my household has used a Raspberry Pi 4B running RetroPie as a retro game emulation system, so I was excited to see that RetroPie has a built-in option to install the Aleph One Marathon games under “ports”. These installs do run, and are playable with a keyboard, but they never worked with our 8BitDo bluetooth controllers. Until today, that is! I finally figured out how to get them running with our controllers, so I thought I should write down what I did.

Aleph One uses SDL, a software library used by many games (among other things) to work with hardware components like controllers, keyboards, displays, etc. If your Linux install of Aleph One is also failing to work with controllers, you might find log entries like this:

No mapping found for controller "8Bitdo SN30 Pro" (05000000c82d00000161000000010000) (joystick_sdl.cpp:66)On our RetroPie this was in the file

/opt/retropie/configs/ports/alephone/Aleph One Log.txt. The “joystick_sdl.cpp” in the log entry is the clue that this is an SDL issue, and that led me to a helpful guide on Reddit for making controllers work with SDL games on Linux.That included a link to a script to download the SDL game controllers database, grab the Linux section, save it to a dot file in the home directory called

.controller_config, and then add a line to your .bashrc (or similar) to add those controller mappings as an environment variable called “SDL_GAMECONTROLLERCONFIG”. I ran that script on our RetroPie box.To briefly explain what this is for: one of SDL’s jobs is to take input from many different kinds of controllers and present them to games as, simply, “D-Pad Up”, “D-Pad Down”, “A Button”, “B Button”, etc. That game controllers database has the actual mappings for that—it basically says, “controller XYZ identifies itself with the number 1234, and on that controller the right trigger is button code b4”, etc, etc, for all of the buttons on all of those controllers. By adding those mappings as an “environment variable” (a value available to every program that you run), you’re telling the SDL code in the game you’re trying to play how to work with the buttons of your controller.

Adding that entire controllers database as an environment variable presumably must work in many cases, but it’s also a bit blunt. The Linux section of that controller database is over 500 lines long! In fact, it’s so long that adding all of that to your environment variables will make ordinary bedrock tools like

rmormvbreak with “argument list too long” errors. If that happened to you and now you’re panicking because you can’t even delete your.controller_configfile, you just need to un-set that environment variable by runningunset SDL_GAMECONTROLLERCONFIGin a terminal.Anxious to see if I could get things working, tonight I just took the

.controller_configfile generated by the script above and deleted everything except for the couple dozen 8BitDo lines (since that’s the brand of all of our assortment of controllers). You should similarly trim your controller file down—just remember to leave the very first line and the very last line of the file untouched, or to otherwise make sure that the file still begins withexport SDL_GAMECONTROLLERCONFIG="and ends with a closing quote mark.You could improve upon my blunt edit by looking in

.controller_configfor the long numeric string(s) in your Aleph One Log.txt “joystick_sdl.cpp” error, and just including the matching line(s)—in my error example above the number to look for would be “05000000c82d00000161000000010000”.So now you should have a

.controller_configfile with a small subset of the giant game controller mapping database, and a line like this in your .bashrc (or similar) file:. "$HOME/.controller_config"To make your controllers work with Marathon when launched from EmulationStation (the frontend used by RetroPie), however, you’ll need to copy that line to the launch scripts for the Marathon ports. Those are in

~/RetroPie/roms/ports/, with names likeAleph One Engine - Marathon.sh. In that file, you need to add that line from your .bashrc as the second-to-last line (just before the long final line that runs the game). Here it is again:. "$HOME/.controller_config"That’s a period for the first character—you’ll need to copy that line exactly, including that period and the quotes.

But once you do that, you should be able to run the Marathon ports from RetroPie and have your wireless controllers work!

Another time I’ll have to improve upon this. PortMaster (a system for installing ports on handheld emulator consoles) does a great job of handling controller mapping for games that use SDL, so their scripts are probably something I should look at. Ideally, the Marathon launch scripts would look at what controllers are attached (possibly with

evtest), look up only those lines in the game controller database, and then set those mappings to the SDL_GAMECONTROLLERCONFIG environment variable before launching the game. Presumably that controllers database already exists somewhere in a normal RetroPie distribution? Who knows—for now I’ve got a score to settle with the Pfhor still occupying the colony ship Marathon. -

Adjusting the Rangefinder on a Seagull 203-I

I recently got a Seagull 203-I, a Chinese-made medium format folding camera with a coupled rangefinder. I haven’t seen results out of it yet, but I’m excited to use it. It takes a special occasion to get me out of the house with a camera larger than jacket pocket size, so something that just barely crams into a men’s jeans butt pocket (ymmv) and offers some focus assurance is a great combo for me.

Of course, that rangefinder won’t help me to nail focus if it’s not adjusted properly. I didn’t find existing instructions online for setting the horizontal alignment of a Seagull 203 rangefinder, but with no other option I managed to figure it out myself, so here’s a brief account of how I did it. Again, I’m working with a Seagull 203-I, a later model with a flash hotshoe and a shutter without EV coupling. Seagull 203 cameras of one stripe or another seem to have been made from about the 1960’s into the 1990’s, but I haven’t heard of any particular changes to the rangefinder coupling during that time, so odds are this should also work for other variants.

Open the folding shutter door, and look at the camera straight-on below the shutter apparatus. You should see a cylindrical flathead screw. As you turn the focus wheel, a specially shaped side of it pushes that screw more or less; I’m not a mechanical engineer, but I believe this arrangement makes the focus wheel a cam, and the screw a cam follower. A spring pulls up the lever the screw is attached to, so that it properly follows the cam. The level that’s moved by the cam follower screw pushes down another lever at a right angle to it. This lever, which runs from about the back end of the shutter into the camera body, is part of additional linkages that transmit that motion to the rangefinder mirror.

OK, so that’s roughly how it works, let’s get down to aligning. Set the focus to infinity and look through the rangefinder at something very far away. If you’re reading this, then probably the double-image isn’t quite lined up. Now look at that screw again and see if you notice anything. Perhaps you noticed an irregularly shaped collar around the base of the screwhead? That’s right, fire up the old Xzibit memes, because your Seagull heard you loved cams, and got you a cam for your cam follower. Adjust that screw (a *tiny* amount) and that collar will nudge the lever up or down a smidge in relation to the cam follower screw head. For me, turning the screw a bit counter-clockwise nudged the ghost image to the left.

That’s it! I hope this helped. A couple notes here at the end, though:

- That screw only adjusts the horizontal alignment. The vertical alignment of my rangefinder is also a bit off, but not so much that I feel like removing the top plate to deal with it.

- If you adjust your rangefinder, use it properly, and still end up with out-of-focus pictures, your problem may be with your lens. Basically, that it’s not actually focused to infinity when the focus wheel says it is. The process to fix this is called collimating the lens.

-

How to fix “This Apple ID is only valid for use in the U.S. Store”

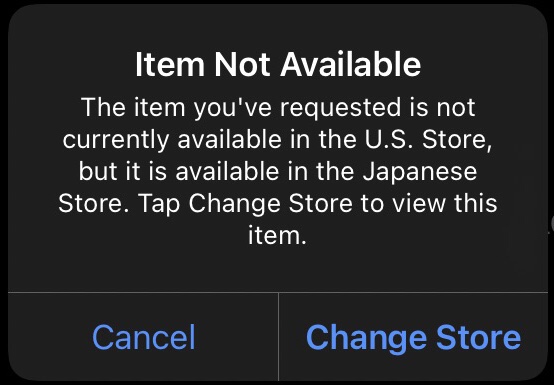

While googling a Japanese musician recently, I followed an Apple Music link, which opened the Music app on my iPad with this message:

Item Not Available: The item you’ve requested is not currently available in the U.S. Store, but it is available in the Japanese Store. Tap Change Store to view this item. This dialog makes the Change Store option seem pretty innocent, and you might assume (like I did) that changing back to your home store would be easy and obvious.

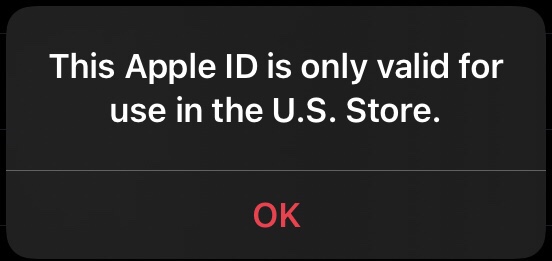

But I found the iOS/iPadOS Music app was “stuck” in the Japanese store after this. My “Browse” suggestions were all from that store, and when I tried to actually play any music in the app, I got this message:

This Apple ID is only valid for use in the U.S. Store. At this point the problem is that if you go searching for how to change what iTunes / App / Apple Music store you are using, all of the results are long, inconvenient processes that involve cancelling all of your subscriptions and backing up all of your purchased media. If you are permanently emmigrating from one country to another and want your account associated with your new home nation’s store, then you really do have to do all that stuff. But if, like I did, you just ended up stuck in the wrong store through viewing an artist or album from a different country, then you haven’t actually changed what country your Apple ID is connected to, so you don’t have to do all of that!

The Fix

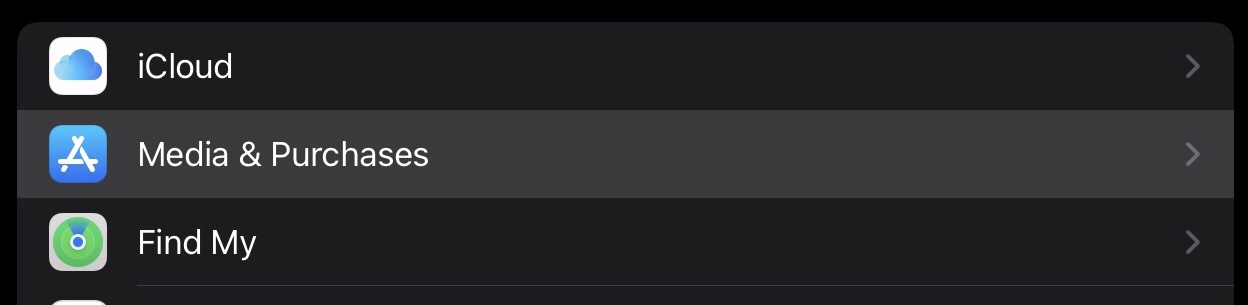

In short: you just have to sign out of the App Store in the iOS Settings app. When you sign back in, it will use the correct stores for whatever country your Apple ID is associated with. To do this, open Settings and select your Apple ID settings. Then tap “Media & Purchases”:

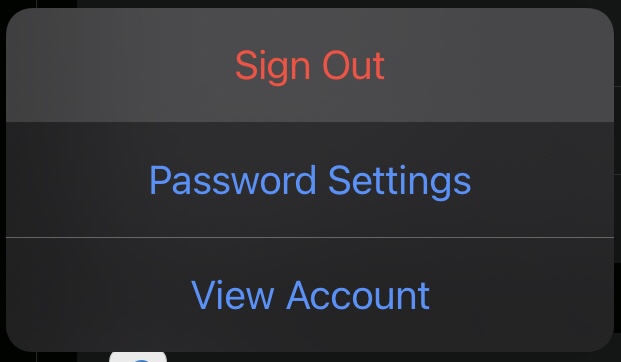

Rather than opening a panel, that will just open a small dialog box that includes a Sign Out option. Tap Sign Out:

It will ask if you’re sure you want to do this—you are. Note that this only signs your account out of the App Store and the media stores; it doesn’t affect anything else on your device using your Apple ID, so it won’t force you to later reload your entire iCloud Photo Library, for example.

Now tap Media & Purchases again. This time it will open a dialog asking if you want to setup Media & Purchases using the Apple ID you’re otherwise using on your device.

Just tap “Continue”, and you’re done! When you sign back in, you’ll be setup to use the stores for whatever country your Apple ID is associated with. I quit the Music app to force it to reload, but I don’t know if that’s necessary. When I opened it again, I was back (for better and for worse) in the U.S. store, and I was once again able to play my music.

I was very relieved that I didn’t have to go through the entire “so you’re migrating to a new country” process outlined in the help documents, but this still seems like a lot of completely unnecessary stress. If Apple wants to let people switch stores easily in order to view music from other countries, they need to have an equally easy and obvious way to switch back. Or better yet, just automatically switch users back to their home country rather that displaying the useless and misleading “This Apple ID is only valid for use in the U.S. Store” message.

-

KickLogstash.sh: 7 Lines to Restart Logstash

It might well be my naive, beginner’s implementation of it, but it sure seems like Logstash is pretty easy to confuse. Elasticsearch temporarily unavailable? Hang. Some weird formatting sneaks in? Hang. Some as-yet-undiscovered circumstance that causes java to eat all your memory? Hang. And each time it hangs, Logstash becomes unresponsive, never recovering. So for the moment, I’ve given up, and I’m resorting to a dumb, sledgehammer-like solution:

#! /bin/sh FILELENGTH=`stat --format=%s /var/log/logstash/logstash.err` if [ $FILELENGTH != "0" ] then killall -9 -u logstash service logstash restart logger Logstash Killed and Restarted Due To Non-Zero Error Log fiAgain, I’ve only had about a week of actually sending a lot of input to Logstash, but so far, there’s always something in the error log when it hangs, and there’s rarely anything in there when things are going well. So this barely-a-script checks the size of the error log, and if it’s non-zero, it SIGKILLs every process belonging to the logstash user, and then tries to start Logstash. The restart part assumes you have a Logstash script in init.d, which I certainly hope will be included as part of a proper .deb distribution of Logstash someday soon. I run it every five minutes with cron:

*/5 * * * * /etc/logstash/kick_logstash.sh

-

Coping With EXIF Rotation Problems

At Knowledge Architecture, we may not operate at the scale of Facebook or Flickr, but Synthesis sees enough image uploads that we’ve run into some pretty strange image rotation problems now and then. I’ve spend a lot of time with the issue, and while I’m not naive enough to declare victory over something as devilish as EXIF rotation gremlins, we’ve got an update rolling out this week that will at least avoid the species we’ve seen so far.

First, a little background, and we’ll imagine a portrait-orientation photo of the Sutro Tower as our example image. Digital images are stored in a given rotation, which generally corresponds to the default rotation of the image sensor—so our Sutro Tower photo is probably sideways on disk. If we open the image in any half-decent viewer, though, the tower will be right-side up because most digital cameras annotate image files using the EXIF Orientation tag. That’s used by most image-viewing things to recognize situations where image may be stored on disk like this, but when displayed it should be (say) flipped, and rotated 90° to look like that.

It’s gotten better, but support for that Orientation value isn’t perfect. So when someone uploads an image to Synthesis, we look for the Orientation EXIF field, rotate the image data itself so that it’s stored the way it should be displayed, and then we remove the Orientation field, because its instruction to rotate the image on display is no longer needed.

The problem we’ve run into is that some image editors apparently don’t update EXIF data properly; they rotate the image data itself (so the Sutro Tower is right-side up), but leave the Orientation flag in place. So our uploading process would dutifullly rotate the image one more time, and the tower would end up pointing sideways. It was a frustrating situation.

Luckily, those EXIF-unaware image editors seem to leave all the other EXIF data unchanged, too. So what our solution does is compare the so-called “EXIF Width” and “EXIF Height” field values to the width and height of the image data on disk. If it looks like the image has already been rotated, we simply remove the Orientation field.

So here’s the important part of the code. In short, we:

- calculate the ratio of actual image width to height, and the ratio of EXIF-reported height to width,

- round them both to one decimal place (to account for resized images subtly changing that ratio),

- and check to see if one equals the other. If so, the image has been rotated already.

This is C# but it’s mostly math so it should be easy enough to port to whatever language you’re using. Not shown is fetching the orientation, exifWidth, and exifHeight values; they’re EXIF fields 0x0112, 0xa002 and 0xa003, respectively. I found this list of EXIF tags very helpful during this whole process.

// Useless data check (this check doesn't work on squares) if (exifWidth > 0 && exifHeight > 0 && exifWidth != exifHeight) { // To see if the bits were rotated, we calculate a height/width ratio, because // we might be dealing with a resized image. We round, becuase resizing might // slightly change this ratio decimal actualRatio = Math.Round( ((decimal)img.Height / (decimal)img.Width) , 1); // height / width decimal exifRatio = Math.Round( ((decimal)exifWidth / (decimal)exifHeight) , 1); // width / height if ( orientation >= 5 && actualRatio == exifRatio ) { // SO, if the orientation is rotate90 or rotate270 (with or without flip), // and the image seems to have already been rotated 90 or 270 (the width // is now the height), we're going to assume that some bad software rotated // the pixels and never removed the orientation flag. In such a case, we'll // trust the lousy software's rotation, and remove the flag. orientationPropItem.Value = new byte[1] { 1 }; img.SetPropertyItem(orientationPropItem); // We return true because in our implementation, we just need to know if the // image file we're storing has changed at all, whether that means rotation // or just removing an EXIF value. return true; } }

-

Subscribe

Subscribed

Already have a WordPress.com account? Log in now.